BioUnfold #10 — Assay Optimization and the Upper Bound of Discovery

Every discovery program begins with a biological hypothesis — but it advances only as far as the signal allows.

Assay optimization defines how biology becomes measurable: which readouts matter, which conditions are stable, and what counts as a meaningful difference. It is where “how do we test this?” quietly decides whether an idea will ever be testable again — and where the opportunity arises to capture more than you planned for.

From Hypothesis to Measurement

Most assays begin the same way: If we reduce this transcription factor, tumor proliferation should decrease.

That logic already defines the signal type — an imaging assay measuring nuclear intensity, perhaps normalized to cell count. This framing works; almost every program starts here. The missed opportunity is to leave it there — to measure only what the hypothesis names and overlook the rest of the biological context the system exposes.

AI expands that context. It can detect subtle localization shifts, shape patterns, or texture gradients that are biologically meaningful but invisible to manual scoring. By exploring those secondary signals, teams often discover better biomarkers — or at least gain a clearer picture of what the assay is truly measuring.

The goal is not transparency or simplicity. It is to make the signal reliable, QC-able, and testable — stable enough that both biologists and algorithms can trust what they see.

Building a Reliable Signal

Before optimization begins, biology must hold its ground. The disease model — cell type, culture conditions, timing — is the foundation; it defines what can and cannot be observed. Then comes the biomarker choice: which measurable aspect of the system best reflects the underlying hypothesis?

This step is still deeply biological, but AI can assist by modeling mechanistic dependencies and contextual behaviors — the same way it supports target identification. Once a plausible marker is chosen, AI helps test its stability:

- Does it hold across replicates and plates?

- Is it sensitive enough to capture subtle changes without drifting into noise?

At this point, assay optimization becomes a dialogue between experimental craftsmanship and signal engineering.

The Trade-offs

Every optimization effort faces the same question: How many channels or stains should we include?

More channels increase what the model can learn, but they add cost and complexity. Sometimes an extra stain improves performance by only a few percent — not enough to justify doubling assay time or data volume. At that point, it becomes a design problem: finding the right balance between expressiveness and reproducibility.

Across my past experience, I have seen both philosophies work. Some programs favor richer imaging, capturing multi-channel phenotypes to map fine-grained states. Others prioritize scalability and automation, relying on fewer, more stable readouts. Each is valid if aligned with what the program is trying to learn — depth or throughput, exploration or production.

The AI as Diagnostic

AI’s real contribution to assay optimization is diagnostic rather than predictive. It quantifies how consistent or discriminative a signal is, identifies hidden correlations, and warns when interventions overshoot the biology. Used this way, AI becomes part of the design toolkit — helping define what counts as measurable and what can safely be ignored.

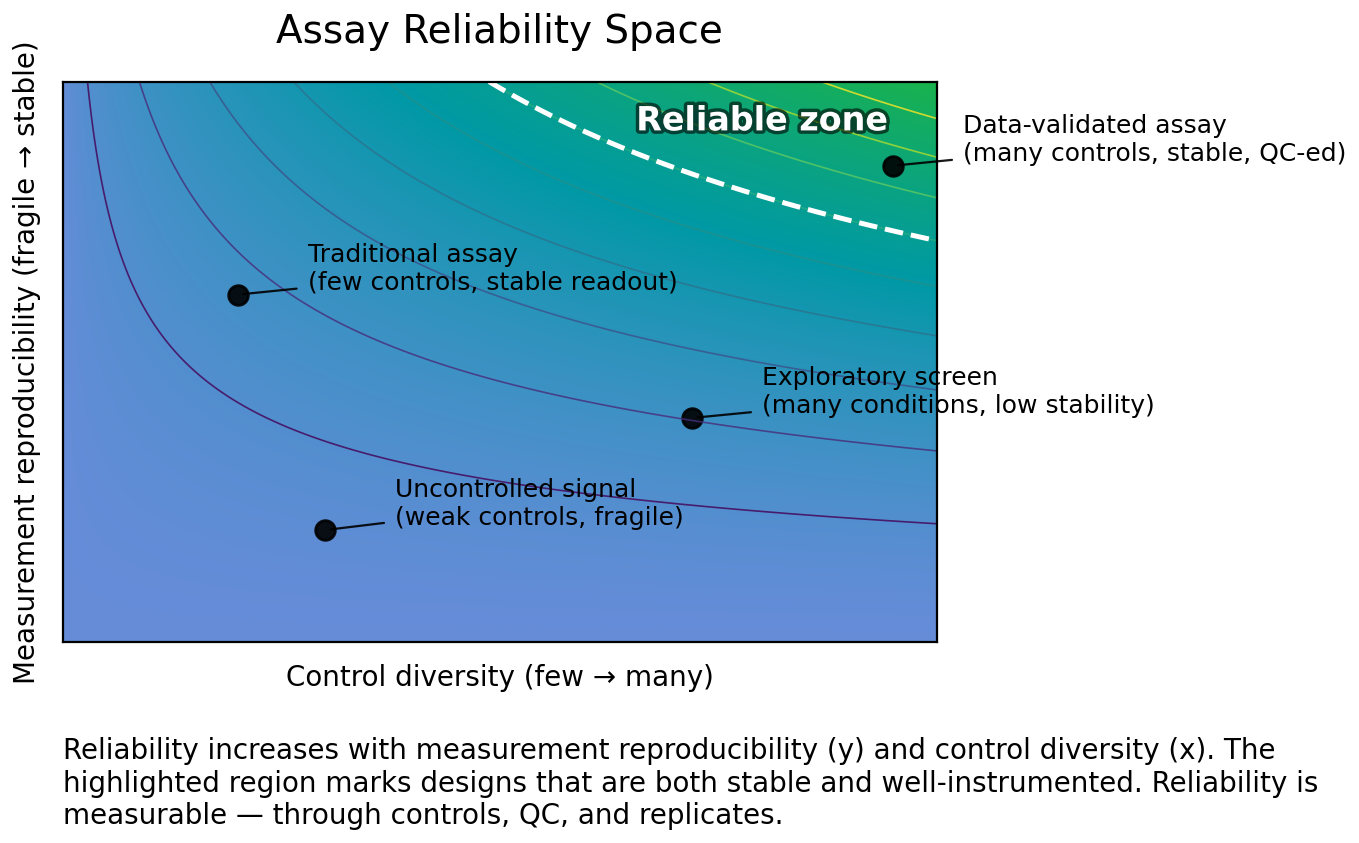

A robust assay does not need to be simple or fully transparent. It needs to be reliable — reproducible, data-validated, and scientifically defensible. That reliability depends on aligning two forms of stability:

- Biological stability — the experiment behaves consistently.

- Computational stability — the signal is separable from noise under normal variation.

When those align, the program gains something fundamental: confidence that the signal means what it claims to mean. And once that reliability is achieved, it does more than stabilize the assay — it defines the limits of what the entire discovery engine can perceive.

The Upper Bound of Discovery

Assay optimization defines the upper bound of signal your discovery program can ever capture. Every downstream analysis — from hit discovery to modeling — inherits its ceiling from the quality of this step.

The completeness of your data begins here: where biology becomes measurable, AI becomes diagnostic, and reliability becomes strategy. That is where discovery truly starts — in the design of what can be trusted.